This post is about counterfactuals in the probit model. I wrote it while reading Pearl et al.’s 2016 book Causal Inference in Statistics: A Primer.

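The probit model with one normally distributed covariate can be written like this:

$$X = U_X, \qquad Y = \mathbb{1}[U_Y \le X], \qquad U_X, U_Y \overset{\text{iid}}{\sim} N(0, 1),$$

so that $P(Y = 1 \mid X = x) = \Phi(x)$.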

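Here is a minimal simulation sketch of this structural model. It assumes the form $X = U_X$, $Y = \mathbb{1}[U_Y \le X]$ with independent standard normal errors, and the helper name `simulate_probit` is hypothetical. Because the model is structural, the same draws of $U_Y$ can be reused to evaluate $Y$ under an intervention $X = x'$.

```r
# Hypothetical helper: simulate n draws from the assumed probit SCM
#   X = U_X,  Y = 1[U_Y <= X],  U_X, U_Y iid N(0, 1)
simulate_probit <- function(n) {
  u_x <- rnorm(n)
  u_y <- rnorm(n)
  x <- u_x
  y <- as.integer(u_y <= x)
  data.frame(x = x, y = y, u_y = u_y)
}

set.seed(1)
sim <- simulate_probit(1e5)

# Under the intervention X = x', Y = 1[U_Y <= x'], so P(Y = 1 | do(X = x')) = pnorm(x')
x_prime <- 0.5
c(simulated = mean(sim$u_y <= x_prime), exact = pnorm(x_prime))
```
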
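Now we wish to find the distribution of the counterfactual $Y_{x'}$; see Pearl et al. (2016) for definitions. The first (abduction) step is to find the density of $U_Y$ given the evidence $X = \hat{x}$ and $Y = 1$, which is

$$f(u \mid X = \hat{x}, Y = 1) = \frac{\phi(u)}{\Phi(\hat{x})}\,\mathbb{1}[u \le \hat{x}],$$

or the normal density truncated to $(-\infty, \hat{x}]$. Had we observed $Y = 0$ instead, it would be the normal density truncated to $(\hat{x}, \infty)$.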
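
The abduction step can be sketched as a density function for $U_Y$ given the evidence. This assumes the structural form $Y = \mathbb{1}[U_Y \le X]$, so $Y = 1$ truncates $U_Y$ to $(-\infty, \hat{x}]$ and $Y = 0$ truncates it to $(\hat{x}, \infty)$. The name `dens_u_given` is hypothetical, and the check uses base R's `integrate`.

```r
# Hypothetical helper: density of U_Y given X = x_hat and Y = y,
# assuming Y = 1[U_Y <= X] with U_Y ~ N(0, 1)
dens_u_given <- function(u, x_hat, y = 1) {
  if (y == 1) {
    # Normal density truncated to (-Inf, x_hat]
    ifelse(u <= x_hat, dnorm(u) / pnorm(x_hat), 0)
  } else {
    # Normal density truncated to (x_hat, Inf)
    ifelse(u > x_hat, dnorm(u) / pnorm(x_hat, lower.tail = FALSE), 0)
  }
}

# Both truncated densities should integrate to 1
x_hat <- 0.3
integrate(dens_u_given, lower = -Inf, upper = x_hat, x_hat = x_hat, y = 1)$value
integrate(dens_u_given, lower = x_hat, upper = Inf, x_hat = x_hat, y = 0)$value
```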

Probability of Necessity

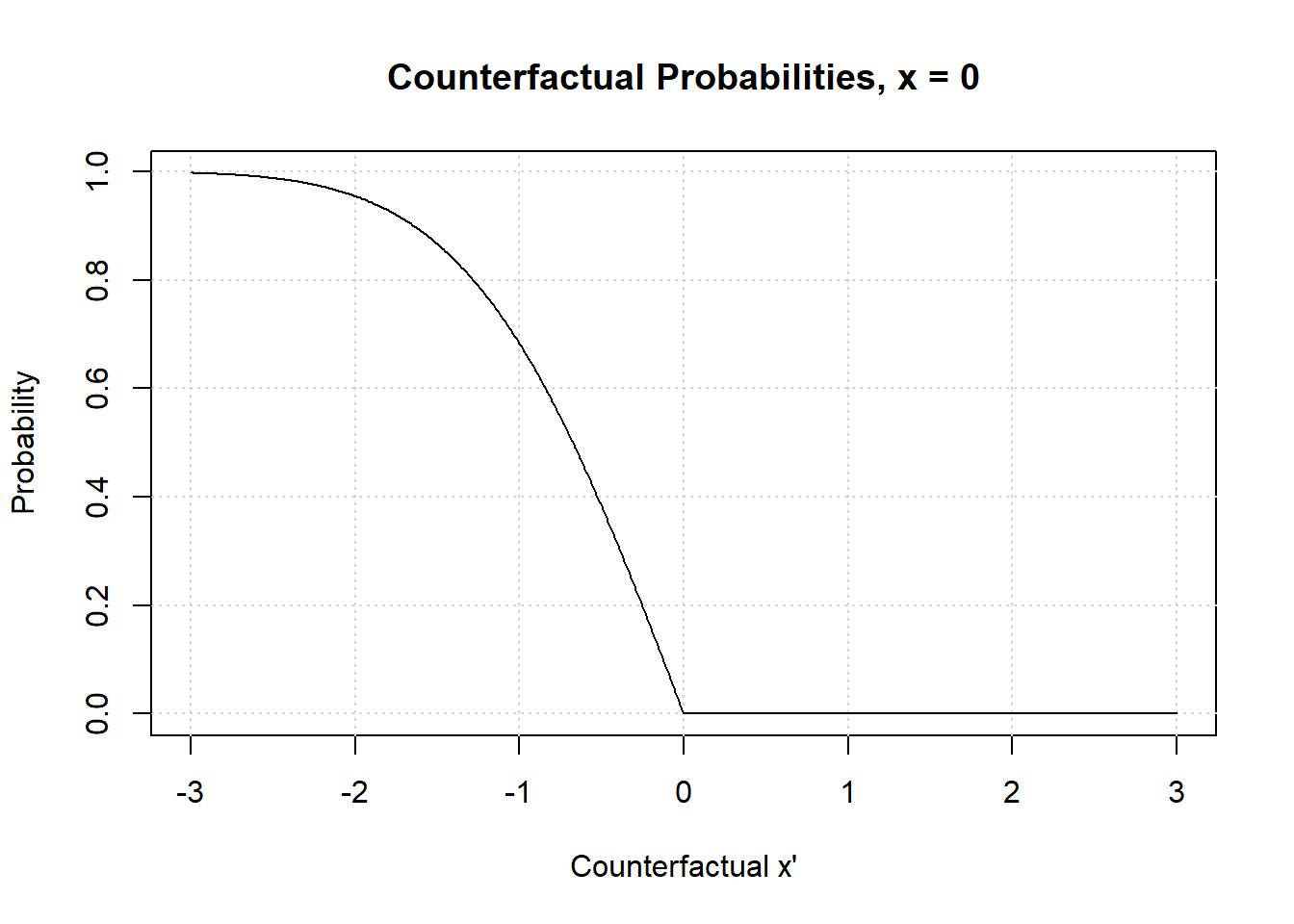

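Now we’ll take a look at the probability of necessity. The most obvious way to generalize the probability of necessity to continuous distributions is to let the counterfactual value $x'$ be a parameter of the counterfactual $Y_{x'}$, like this:

$$\text{PN}(x'; \hat{x}) = P(Y_{x'} = 0 \mid X = \hat{x}, Y = 1) = 1 - \frac{\Phi(\min(x', \hat{x}))}{\Phi(\hat{x})}.$$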

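The closed form follows from the three steps of abduction, action and prediction, assuming the structural form $Y = \mathbb{1}[U_Y \le X]$. Abduction truncates $U_Y$ to $(-\infty, \hat{x}]$. The action sets $X = x'$, so that $Y_{x'} = \mathbb{1}[U_Y \le x']$. Prediction then gives

$$
\begin{aligned}
P(Y_{x'} = 0 \mid X = \hat{x}, Y = 1)
  &= P(U_Y > x' \mid U_Y \le \hat{x}) \\
  &= \frac{P(x' < U_Y \le \hat{x})}{\Phi(\hat{x})} \\
  &= \frac{\Phi(\hat{x}) - \Phi(\min(x', \hat{x}))}{\Phi(\hat{x})} \\
  &= 1 - \frac{\Phi(\min(x', \hat{x}))}{\Phi(\hat{x})}.
\end{aligned}
$$
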
Let’s plot this function.

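```r
# Grid of counterfactual values x'
x <- seq(-3, 3, by = 0.01)
# Observed value of X
x_hat <- 0

# Probability of necessity: P(Y_{x'} = 0 | X = x_hat, Y = 1)
ps <- function(x, x_hat) 1 - pmin(pnorm(x_hat), pnorm(x)) / pnorm(x_hat)

plot(x = x, y = ps(x, x_hat),
     type = "l",
     xlab = "Counterfactual x'",
     ylab = "Probability",
     main = "Counterfactual Probabilities, x = 0")
grid()
# Redraw the curve so it sits on top of the grid lines
lines(x = x, y = ps(x, x_hat), type = "l")
```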

There is nothing too strange. The probability of necessity goes to $1$ as $x' \to -\infty$. This is what you would expect, because the probability of $Y_{x'} = 1$ becomes really slim as $x'$ decreases. When $x' \geq \hat{x}$, the probability of necessity is $0$: if you observed $Y = 1$ with $X = \hat{x}$, you will always observe $Y_{x'} = 1$ counterfactually for any $x' \geq \hat{x}$.
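
As a sanity check, the closed form can be compared with a Monte Carlo version of abduction, action and prediction. This sketch assumes $Y = \mathbb{1}[U_Y \le X]$ and samples the truncated $U_Y$ by inverse-CDF sampling. The function names `pn_exact` and `pn_mc` are hypothetical.

```r
# Closed form: P(Y_{x'} = 0 | X = x_hat, Y = 1)
pn_exact <- function(x_prime, x_hat) {
  1 - pnorm(pmin(x_prime, x_hat)) / pnorm(x_hat)
}

# Monte Carlo via abduction, action, prediction
pn_mc <- function(x_prime, x_hat, n = 1e5) {
  # Abduction: draw U_Y from N(0, 1) truncated to (-Inf, x_hat] by inverse CDF
  u_y <- qnorm(runif(n) * pnorm(x_hat))
  # Action: set X = x'; prediction: Y_{x'} = 1[U_Y <= x']
  y_cf <- as.integer(u_y <= x_prime)
  mean(y_cf == 0)
}

set.seed(2016)
x_hat <- 0
x_primes <- c(-2, -1, -0.5, 0, 1)
cbind(x_prime = x_primes,
      exact = pn_exact(x_primes, x_hat),
      mc = sapply(x_primes, pn_mc, x_hat = x_hat))
```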

Integrated Probability of Necessity

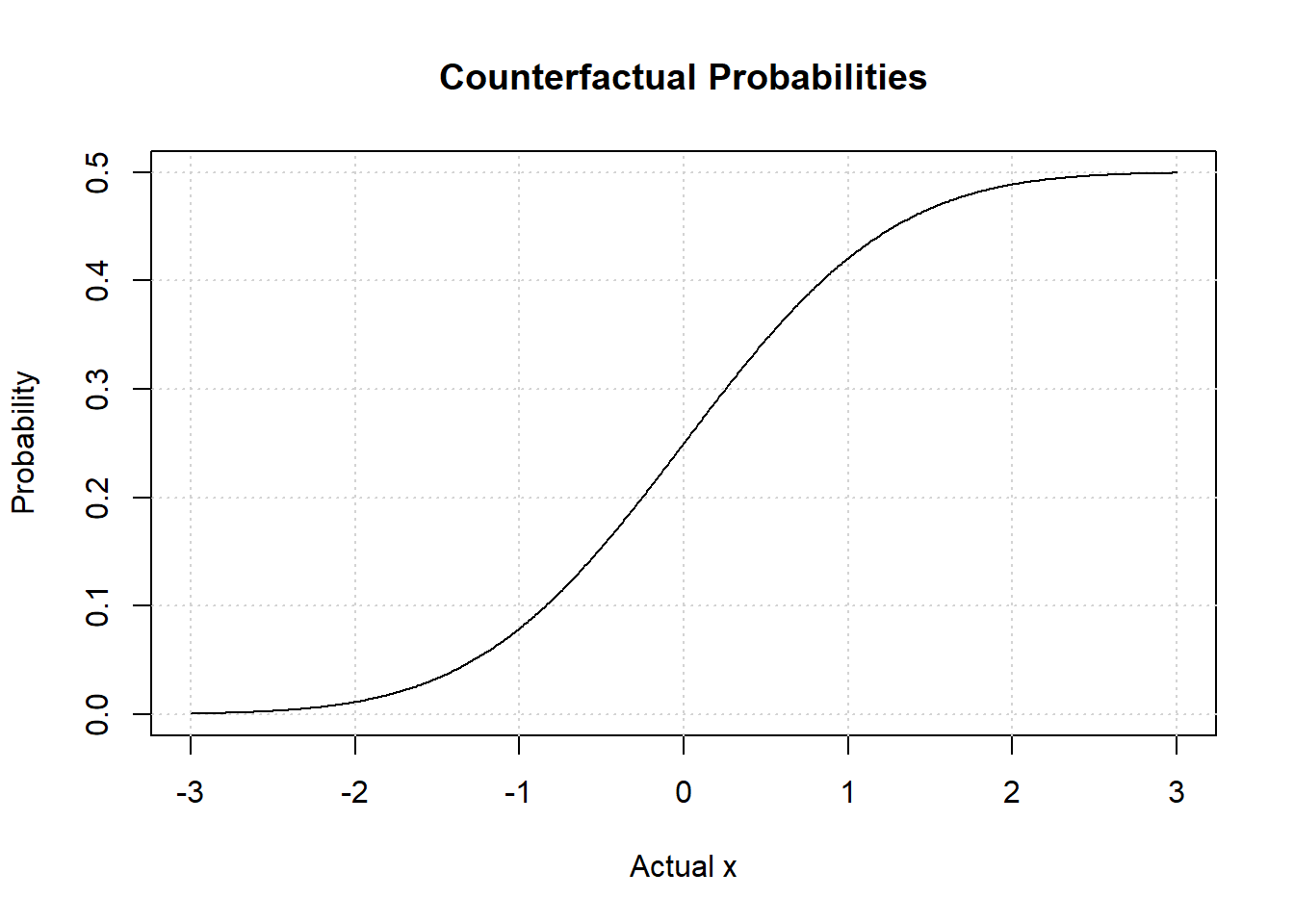

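A more complicated question is: what rôle did the fact that $X = \hat{x}$ play in $Y = 1$? Or, if $X$ hadn’t been $\hat{x}$, what would $Y$ have been? With some abuse of notation, $P(Y_{X'} = 0 \mid X = \hat{x}, Y = 1)$ answers this question, where $X'$ is an independent copy of $X$. It works out to $\Phi(\hat{x})/2$.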
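
Under the same assumed structure $Y = \mathbb{1}[U_Y \le X]$, with $X' \sim N(0, 1)$ independent of $U_Y$, the closed form comes from averaging the event $\{X' < U_Y\}$ over the truncated distribution of $U_Y$:

$$
\begin{aligned}
P(Y_{X'} = 0 \mid X = \hat{x}, Y = 1)
  &= P(U_Y > X' \mid U_Y \le \hat{x}) \\
  &= \frac{1}{\Phi(\hat{x})} \int_{-\infty}^{\hat{x}} \Phi(u)\,\phi(u)\,du \\
  &= \frac{1}{\Phi(\hat{x})} \cdot \frac{\Phi(\hat{x})^2}{2} \\
  &= \frac{\Phi(\hat{x})}{2}.
\end{aligned}
$$

This matches the `0.5*pnorm(x)` used for the plot.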

Now let’s plot this.

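```r
# Integrated probability of necessity as a function of the observed x_hat;
# x is the grid defined earlier
counter <- function(x) 0.5 * pnorm(x)

plot(x = x,
     y = counter(x),
     type = "l",
     xlab = "Actual x",
     ylab = "Probability",
     main = "Counterfactual Probabilities")
grid()
# Redraw the curve so it sits on top of the grid lines
lines(x = x, y = counter(x), type = "l")
```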

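Notice the asymptote at $1/2$ as $\hat{x} \to \infty$. No matter how large an $\hat{x}$ we observe together with $Y = 1$, we can never be more than $50\%$ certain that $Y = 0$ if we were to draw an $X$ once again. A very large $\hat{x}$ tells us almost nothing about $U_Y$, and two independent standard normals are equally likely to be ordered either way. The asymptote at $0$ as $\hat{x} \to -\infty$ means the opposite. If we had observed $X$ at a very small value together with $Y = 1$ and then drew $X$ again, we would be almost certain that $Y = 1$ would happen once again.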
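
The closed form can also be checked numerically by integrating the probability of necessity $1 - \Phi(\min(x', \hat{x}))/\Phi(\hat{x})$ against the standard normal density of $X'$. This sketch uses base R's `integrate`, and the names `pn` and `ipn_numeric` are hypothetical. Evaluating it at moderately small and large $\hat{x}$ shows the values approaching the two asymptotes, $0$ and $1/2$.

```r
# Probability of necessity for a fixed counterfactual x'
pn <- function(x_prime, x_hat) 1 - pnorm(pmin(x_prime, x_hat)) / pnorm(x_hat)

# Integrated probability of necessity: average pn over X' ~ N(0, 1).
# pn is 0 for x' >= x_hat, so only (-Inf, x_hat] contributes.
ipn_numeric <- function(x_hat) {
  integrate(function(x_prime) pn(x_prime, x_hat) * dnorm(x_prime),
            lower = -Inf, upper = x_hat, rel.tol = 1e-6)$value
}

x_hats <- c(-3, -1, 0, 1, 3)
cbind(x_hat = x_hats,
      numeric = sapply(x_hats, ipn_numeric),
      closed_form = 0.5 * pnorm(x_hats))
```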